[reading time 5 minutes]

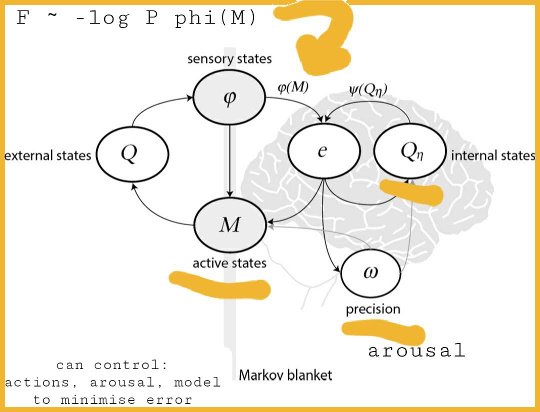

It has been said at least twice recently (by the philosophers Patrick Grim and Peter Godfrey-Smith) that consciousness is somehow attributable to "loops" in neural processing. In the jargon of signal processing (as in robotics), this would be called "feedback"; in computer science, such processing is called "recursion". Yet neither robots nor computers as we know them are conscious. I have previously proposed that insofar as cognitive loops give rise to consciousness, they could only do so in a system which can map space and time and which then maps itself. Among other things, this is why you must sometimes return to a certain room or area of space in order to recover a thought. From pages 11 and 12 of The Phenomenology of Animal Tracking (link):

After that, what might the next step in conceptual evolution be for such a creature? It could - and this, as I’ll put forth, would be momentous - develop a nervous system to “map” its world: where the food and the predators are (at one moment in time - more on this later). I would argue that this would be the most rudimentary brain possible because, while not conscious, it would lead to the development of consciousness: once you have a Complex Adaptive System which has invented a way to map, you have one component of a system which can take advantage of folding its processing back in on itself.

I want to briefly distinguish this concept from the more familiar concept of recursion in Computer Science. Recursion is simply the reuse of code or circuitry to either save memory or otherwise make a device or piece of software more compact. It is clever, in a logical way, but it can always be rewritten to accomplish the same goal with non-recursive loops, and it never results in an amplification of processing or abstractive power - in the jargon of Complex Adaptive Systems, it never results in improved schema.

What I mean by maps is this: if a system develops an architecture which can keep track of entities in space, then that architecture - itself a collection of entities in space - might further develop into a system which applies its own mapping structures to map itself, resulting in a new and more powerful architecture. In the realm of conceptual evolution, then, this is a plausible beginning to how higher-order brains developed from simpler nervous systems. Only a system which is naturally designed to process spatial/temporal data with mapping and memory could possibly take advantage of mapping and memory abstracted internally. No computer as we know it today could do this - could increase its abstractive power by adding more of the same architecture. But I’m getting a little ahead of myself.

Once a creature can map, and make maps of maps, the stage is set for the next major step in conceptual evolution. If you can create a map, then you can modify your map: you can add and delete elements. There is nothing special in this. You go to spatial position Y to retrieve food which you earlier marked at Y. If the food is still there, you eat it and erase Y from your map; if you find the food has already been eaten, you also erase Y from your map. However, if you begin to use your map to, in essence, overlay maps in time, you develop the next (and perhaps, as we’ll soon see, the ultimate) ability in the realm of conceptual evolution: you can make predictions. In other words, spatial and temporal processing architecture could be developed in such a way as to take advantage of turning its processing back onto itself, which develops maps into maps of maps, and these into predictive schemata. Now, whereas such a creature was once wholly a Complex Adaptive System by bodily architecture alone, it is now that Complex Adaptive System architecture with another Complex Adaptive System inside of itself - its brain. It is a model of the world, within a model of the world. It is all material. Yet it is mind and body. And that is us.
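The excerpt's claim that recursion can always be rewritten as a non-recursive loop is a standard result in computer science, and a small example makes it concrete. The Python sketch below is only an illustration under stated assumptions: nested dictionaries stand in for "a map containing maps", and the `territory` data and function names are hypothetical. Both functions count every leaf entry and always agree. The recursive version is simply more compact, while the loop version replaces the call stack with an explicit list.

```python
from typing import Any


def count_leaves_recursive(node: Any) -> int:
    """Count non-dict values in a nested dict by calling itself on each sub-dict."""
    if not isinstance(node, dict):
        return 1  # a leaf entry
    return sum(count_leaves_recursive(child) for child in node.values())


def count_leaves_iterative(node: Any) -> int:
    """Same count, using an explicit stack instead of recursion."""
    total = 0
    stack = [node]
    while stack:
        current = stack.pop()
        if isinstance(current, dict):
            stack.extend(current.values())  # defer the sub-entries
        else:
            total += 1  # a leaf entry
    return total


# Hypothetical example: a map whose entries include other maps.
territory = {
    "food": {"Y": "berries", "Z": "seeds"},
    "predators": {"W": "hawk"},
    "den": "X",
}

print(count_leaves_recursive(territory))  # 4
print(count_leaves_iterative(territory))  # 4
```

Neither version can compute anything the other cannot, which is the sense in which recursion, by itself, adds no processing power.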

What I want to add here are two pieces of independent research which corroborate my cognition-as-maps-of-maps hypothesis. But first, briefly, a note on the term "cognition". Within our minds and bodies we employ both conscious and unconscious cognition, along with states in between, on a spectrum, and this spectrum presumably occurs, at least in part, elsewhere in the evolutionary chain of life. By "cognition" here I mean anything on that spectrum which produces some type of qualia or subjective experience. That is, any form of cognition which is not wholly unconscious (non-conscious, robotic, etc.).

Now, the first piece of corroborating evidence is from Milner and Goodale's seminal paper on the two-streams hypothesis (link). This research lays out the now widely accepted finding that after visual data reaches the occipital lobe, it is processed twice: once as a "where" stream of information (the dorsal stream), and separately as a "what" stream of information (the ventral stream). (The "what"/"where" labels go back to Ungerleider and Mishkin; Milner and Goodale themselves recast the dorsal stream as a "how" stream serving visually guided action.) The "where" stream is shown in their paper to process map-type information about visually sensed objects, and this is done non-consciously. The "what" stream - which yields visual recognition of objects - is processed consciously, with the full awareness of the observer, or, as John Locke would have it, "perceiving that he does perceive".

I propose that, evolutionarily, the unconscious dorsal stream developed first. This is a robotic mapping of one's environment. Once this can be accomplished, the visual processes themselves can be mapped - made amenable to tracking and prediction. This became the ventral stream**, and this, I propose, is exactly the neural-level definition of conscious processing. In other words, this is what consciousness is: neural mapping architecture applied to the architecture's own signals.

The second piece of corroborating evidence comes from Jeff Hawkins' intelligence research outfit, Numenta, and their work on the role of grid cells in cognitive processing among cortical regions (link). In short, their research proposes that objects are recognized in allocentric (object-centered) coordinates, with grid cells performing coordinate transforms between object and observer coordinate frames. That object mapping is so central to object recognition is extremely telling in the story of how evolution yielded consciousness, as should be obvious by now from the rest of this post.
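To make "coordinate transforms between object and observer coordinate frames" concrete, here is a generic 2D rigid-body transform in Python. This is not Numenta's grid-cell model, only the textbook geometry such a model has to accomplish. The cup, its pose (position and heading in the observer's frame), and the function names are all hypothetical. A feature stored in the object's own frame is converted into the observer's frame and back again.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def object_to_observer(p: Point, obj_pos: Point, obj_heading: float) -> Point:
    """Rotate an object-centered point by the object's heading (radians),
    then translate by the object's position in the observer's frame."""
    x, y = p
    c, s = math.cos(obj_heading), math.sin(obj_heading)
    return (obj_pos[0] + c * x - s * y, obj_pos[1] + s * x + c * y)


def observer_to_object(q: Point, obj_pos: Point, obj_heading: float) -> Point:
    """Inverse transform: undo the translation, then rotate by -heading."""
    dx, dy = q[0] - obj_pos[0], q[1] - obj_pos[1]
    c, s = math.cos(obj_heading), math.sin(obj_heading)
    return (c * dx + s * dy, -s * dx + c * dy)


# Hypothetical example: a cup's handle sits at (1, 0) in the cup's own frame.
cup_pos, cup_heading = (3.0, 2.0), math.pi / 2  # cup rotated 90 degrees
handle_obs = object_to_observer((1.0, 0.0), cup_pos, cup_heading)
print(tuple(round(v, 6) for v in handle_obs))  # (3.0, 3.0)
back = observer_to_object(handle_obs, cup_pos, cup_heading)
print(tuple(round(v, 6) for v in back))  # (1.0, 0.0)
```

The object-frame description of the handle stays the same however the cup is positioned. Only the pose changes, which is why recognizing objects in object-centered coordinates is attractive.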

The case here is by no means closed. Yet my cognition-as-maps-of-maps hypothesis is very amenable to testing, in biological systems as well as in more artificial ones. In your own map of technologies and scientific ideas to watch out for, keep this idea pinned.

** - note: work by Dehaene et al. suggests the conscious/unconscious split between the ventral and dorsal streams is not so clear-cut, but in a way that suggests only that the recursive mapping mechanisms, while capable of producing consciousness, may also operate in or contribute to unconscious modes of cognition; see Dehaene, “Consciousness and the Brain”, pages 58-59.