
Qualia (Simulation a la Barrett?)

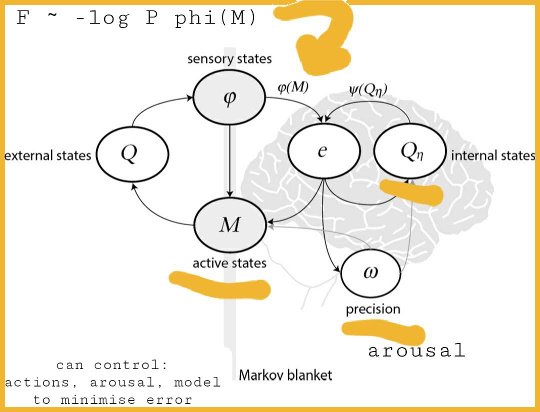

I want to talk about qualia - the way things seem to us, like the taste of wine or the orangeness of an orange. Some people think qualia are something of a causal dangler; that is to say, the orangeness of an orange is just the way an orange is to us, and there really is nothing more to say about it. I disagree. Qualia serve a purpose. I'm going to conclude that qualia are a veneer that simplifies the world for us, because at the smallest scales of reality there is just too much information for us to digest. So let's get there.

I've already talked about how the external world is modeled within us using theories such as Jeff Hawkins' Hierarchical Temporal Memory (link). What I want to add is that "the world" also includes our internal bodily selves - we need to model ourselves as objects in this world as well, particularly our internal energy and status signals (our interoceptive network). This is the insight of Lisa Feldman Barrett in her book How Emotions Are Made: The Secret Life of the Brain. In fact, she concludes, emotions are the qualia of our internal world, in direct analogy with the visual, auditory, and other qualia derived from the external world.

Only she doesn't use the word qualia. This seems to be a bridge too far in her book. For example, her constructionist view of visualizing a bee begins with the full qualia of bright/dark blobs, and ends in the full qualia of a bee. I insist on taking this one step further: visualizing a bee begins in fully non-conscious, raw, utterly non-qualia'd light frequencies, perhaps proceeds to bright/dark blobs or other intermediate steps, and finally ends in the full experience of a bee, all via the same constructionist principles invoked by Dr Barrett. In this way, the philosophical "problem" of qualia is solved. Qualia equate to what Dr Barrett calls simulation, or concepts, aka prediction. The way the world seems to us is our low-fidelity prediction of the world (which includes our internal selves), such that we can process an incredibly complicated reality.

Let me pause to emphasize how awesome Dr Barrett and her research are. The insight that we model our internal world like an external world (or rather, that we model them with the same methods) is crucial to understanding consciousness itself. Consider Artificial Intelligence. Most modern AI is trained using machine learning techniques, and machine learning is at bottom a mathematical optimization problem: one must provide a truth dataset or simulation on which to train the AI. But what is truth to a living being which must bootstrap itself in the world? At the level of our interoceptive network, "truth" is simply whatever optimizes our internal states, from an evolutionary fitness standpoint (see this post on the conceptual evolution of consciousness). This network, being evolutionarily first, provides an optimization framework for our higher-order (evolutionarily more advanced) senses and qualia, such as vision and hearing.

Another amazing insight from Dr Barrett is humanity's use of words to spread concepts amongst ourselves. That is, rather than having to model every piece of the world from scratch, we can (and inevitably do) copy the way those around us have modeled the world as a starting point within ourselves. This explains the centrality of language in what makes us human, and the ability of words to store cultural energy, as I've said here (link).

Locke as Constructionist

Having rightfully praised Dr Barrett, let me lodge one more criticism. In at least three whitepapers, Dr Barrett lumps philosopher John Locke into the essentialist camp, but as I'll show, Locke is squarely a constructionist, especially when it comes to Dr Barrett's particular field of study, emotions. That Locke has been mistaken for an essentialist stems from a misunderstanding of Locke's comments on Aristotle (as Nigel Leary argues in How Essentialists Misunderstand Locke). This is ironic, since Dr Barrett corrects similarly egregious misunderstandings perpetuated in the name of Charles Darwin and William James.

So what does Locke himself say about emotions? Here is Locke in Book II, chapter 20, paragraph 14 of An Essay Concerning Human Understanding:

These two last, envy and anger, not being caused by pain and pleasure simply in themselves, but having in them some mixed considerations of ourselves and others, are not therefore to be found in all men, because those other parts, of valuing their merits, or intending revenge, is wanting in them.

Thus emotions are not universal or based in essences, but are rather constructed from our societal concepts. Here, "pain and pleasure" are Locke's version of affect, which he further refines as "uneasiness" and "desire". From Book II, chapter 21, paragraph 31:

All pain of the body, of what sort soever, and disquiet of the mind, is uneasiness: and with this is always joined desire, equal to the pain or uneasiness felt; and is scarce distinguishable from it.

These are even specifically associated with body-budgeting concerns (Book II, chapter 21, paragraph 34):

And thus we see our all-wise Maker, suitably to our constitution and frame, and knowing what it is that determines the will, has put into man the uneasiness of hunger and thirst, and other natural desires, that return at their seasons, to move and determine their wills, for the preservation of themselves, and the continuation of their species.

Locke goes further on the construction of emotions ("passions") from affect ("uneasiness/desire") in Book II, chapter 21, paragraph 40:

But yet we are not to look upon the uneasiness which makes up, or at least accompanies, most of the other passions, as wholly excluded in the case. Aversion, fear, anger, envy, shame, etc. have each their uneasinesses too, and thereby influence the will. … Nay, there is, I think, scarce any of the passions to be found without desire joined with it.

Here is Locke, in 1689, fully anticipating constructionism, and even psycho-physiological affect. We are not to discredit Locke for calling these by different names, as he did not benefit from the wealth of cultural knowledge stored in 20th-century words (an amazing example of the power of words-as-tool).

Once more, from Book II, chapter 21, paragraph 46:

The ordinary necessities of our lives fill a great part of them with the uneasinesses of hunger, thirst, heat, cold, weariness, with labor, and sleepiness, in their constant returns, etc. To which, if, besides accidental harms, we add the fantastical uneasiness (as itch after honor, power, or riches, etc.) which acquired habits, by fashion, example, and education, have settled in us, and a thousand other irregular desires, which custom has made natural to us, we shall find that a very little part of our life is so vacant from these uneasinesses, as to leave us free to the attraction of remoter absent good.

It's time to give Locke credit where credit is due.

So there it is. Qualia are the way in which a consciousness - a tiny subset of a world - can know things about that world, including itself. Qualia are constructed within us for this purpose. Locke knew as much. Only he didn't have all the right words for it ;-)

Simulation a la Bostrom (post-script)

One final note on simulation... "Simulation" as used above refers to one of the ways Dr Barrett describes the prediction mechanisms of our brain. These days in philosophy, however, the word "simulation" is likely to evoke Nick Bostrom's paper Are You Living in a Computer Simulation? That is not what is meant above. But now that I've brought up Bostrom's paper, let me answer the question posed by its title: no, we are not living in a computer simulation. My counterargument is as follows. Using Bostrom's own template, we are either the most advanced intelligence in the universe, or we are not. Let's call the probability of each 50/50, since we have no evidence either way to adjust that starting probability. It follows, then, that there is an enormous chance we are as ants living underfoot of an advanced intelligence that is not our own. But it cannot be both likely that we are a simulation of our progeny's making and likely that our intelligence is subordinate to an alien superintelligence; otherwise we would be in a simulation of alien design, or would have our lives interceded upon by that intelligence in some other way (or not, but that is only part of the larger probability). This paradox of possibilities stems from the fact that Bostrom's template begins in fantasy, and is not sufficiently mediated by fact. If we extrapolate our future from unmitigated imagination, then the limiting factor is not fact, but just that - imagination.